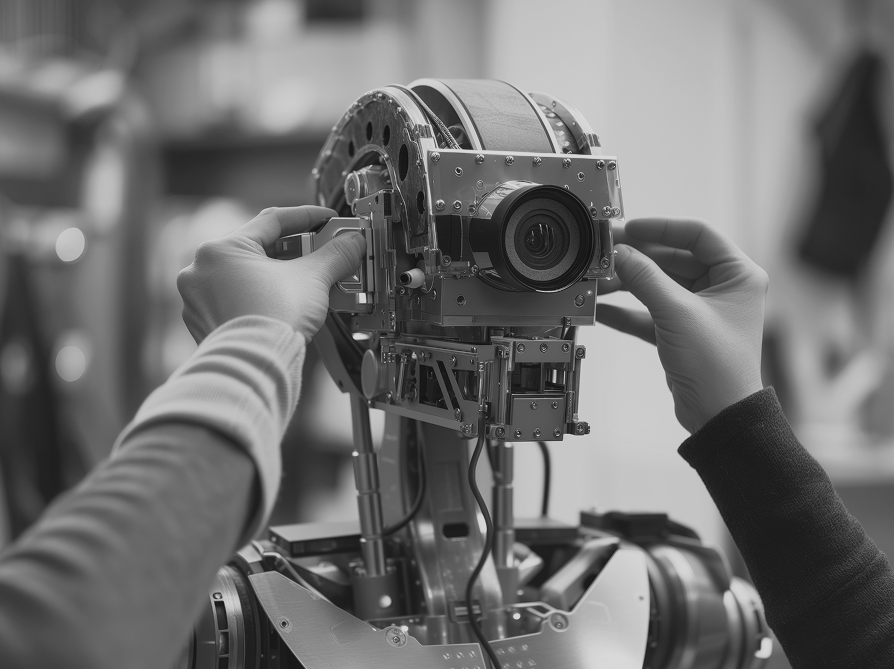

Calibration Station

- Calibrates any type of optics

- Captures millions of reference points per pose

- ML-based filtering of corrupted data for the best calibration results

- Exports to industry-standard formats such as OpenCV, or to a pixelwise view-ray lookup table

- Advanced quality assessment tools

- Suitable for R&D, DIY projects, and small-batch production

Metrological calibration completed at identical positions, in the identical environment
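To make the "pixelwise view-ray lookup table" export option above more concrete, here is a minimal sketch of what such a table could look like. It is an illustration only, not the actual export format of the product: the function names `build_ray_lut` and `point_at_distance` are hypothetical, and the table is filled from a simple pinhole formula (assumed intrinsics `fx`, `fy`, `cx`, `cy`), whereas a calibrated free-form table would store measured directions instead.

```python
import math


def build_ray_lut(width, height, fx, fy, cx, cy):
    """Return lut[v][u] = unit view-ray direction for pixel (u, v).

    Assumption: a central camera, so every ray starts at the origin and
    only its direction is stored. Here the directions come from a pinhole
    formula purely as placeholder data.
    """
    lut = []
    for v in range(height):
        row = []
        for u in range(width):
            d = ((u - cx) / fx, (v - cy) / fy, 1.0)
            norm = math.sqrt(d[0] ** 2 + d[1] ** 2 + d[2] ** 2)
            row.append((d[0] / norm, d[1] / norm, d[2] / norm))
        lut.append(row)
    return lut


def point_at_distance(lut, u, v, distance):
    """3D point on the view ray of pixel (u, v) at the given distance from the origin."""
    dx, dy, dz = lut[v][u]
    return (dx * distance, dy * distance, dz * distance)


# Tiny 4x3 "camera" whose principal point sits on pixel (1, 1).
lut = build_ray_lut(width=4, height=3, fx=2.0, fy=2.0, cx=1.0, cy=1.0)
center_point = point_at_distance(lut, u=1, v=1, distance=5.0)
```

Because each pixel has its own entry, such a table can in principle represent any optical design without committing to a parametric distortion formula.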

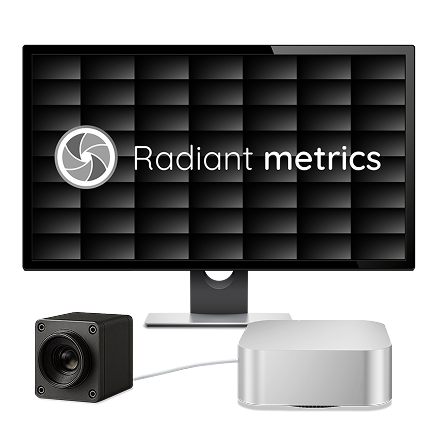

- Flat pre-calibrated screen

- Mac Mini or similar PC

- Preinstalled Radiant Metrics Calibration Studio

- A camera for testing and maintenance

- A 3D reference point is determined for every camera pixel

- Each point comes with an unbiased uncertainty estimate

- Per-pixel photometric sensitivity characterization

- For a 12 MP camera, up to 12 million reference points per pose

- Supports any camera model: OpenCV, ROS, Halcon, custom free-form (pixelwise view-ray LUT), etc.

- Supports any optical design: perspective, fisheye, catadioptric, telecentric, and more

- Quantified consistency, reliability, and repeatability: automated outlier rejection, train/test split, k-fold cross-validation

- Smart model selection: the system chooses the optimal camera model based on task-specific tolerances and detects over/underfitting

- Data quality: visual maps of decoding uncertainties

- Model-to-data consistency:

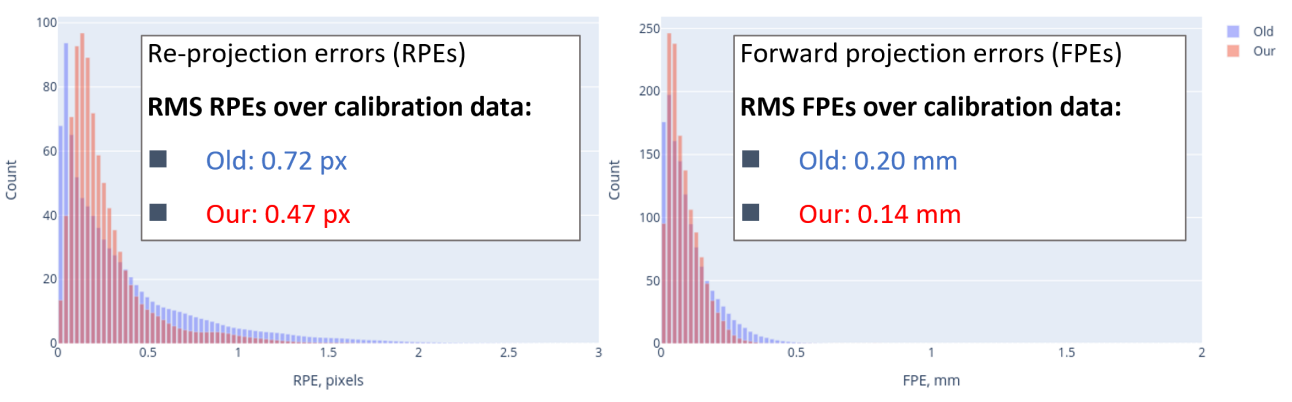

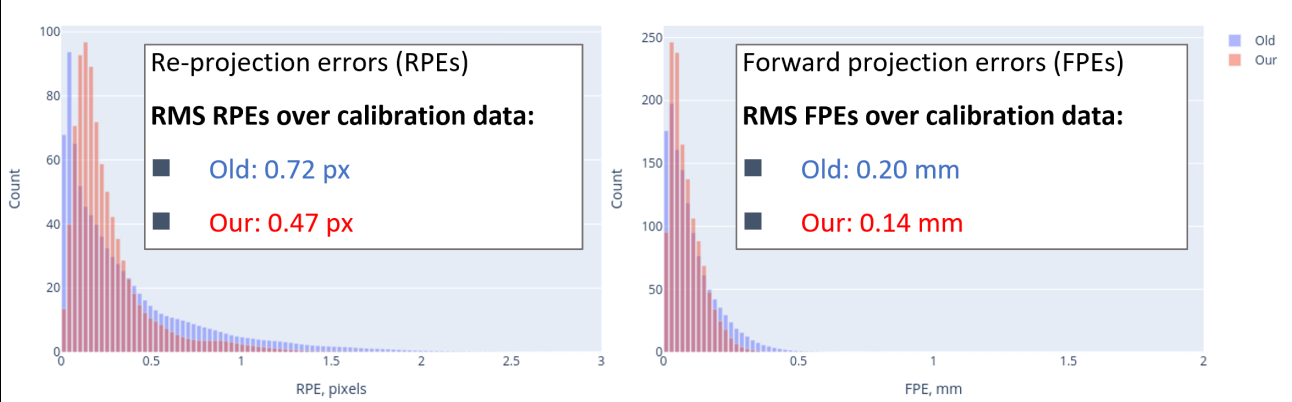

- RMS reprojection errors (RPE)

- RPE distribution maps

- RMS forward projection errors (FPE)

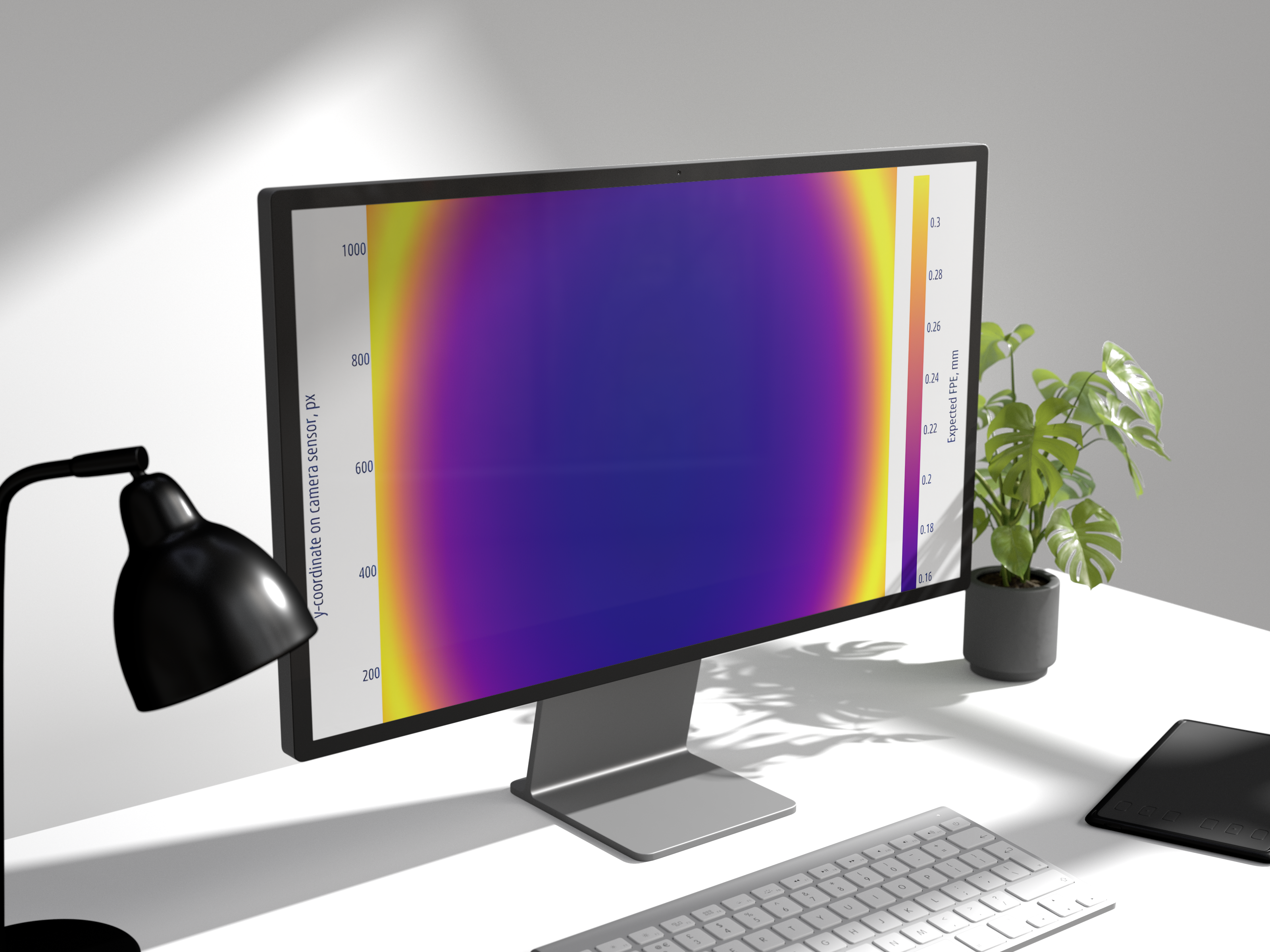

- FPE distribution maps

- Model reliability: RPE/FPE measured on test data

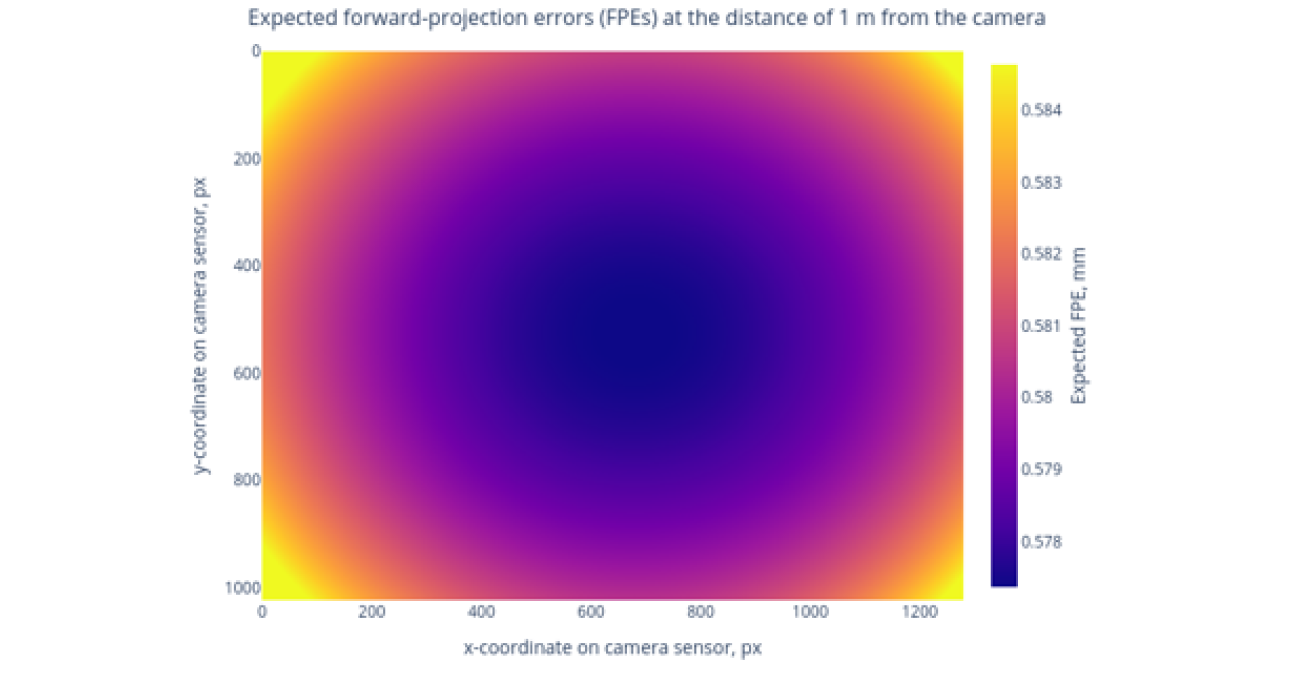

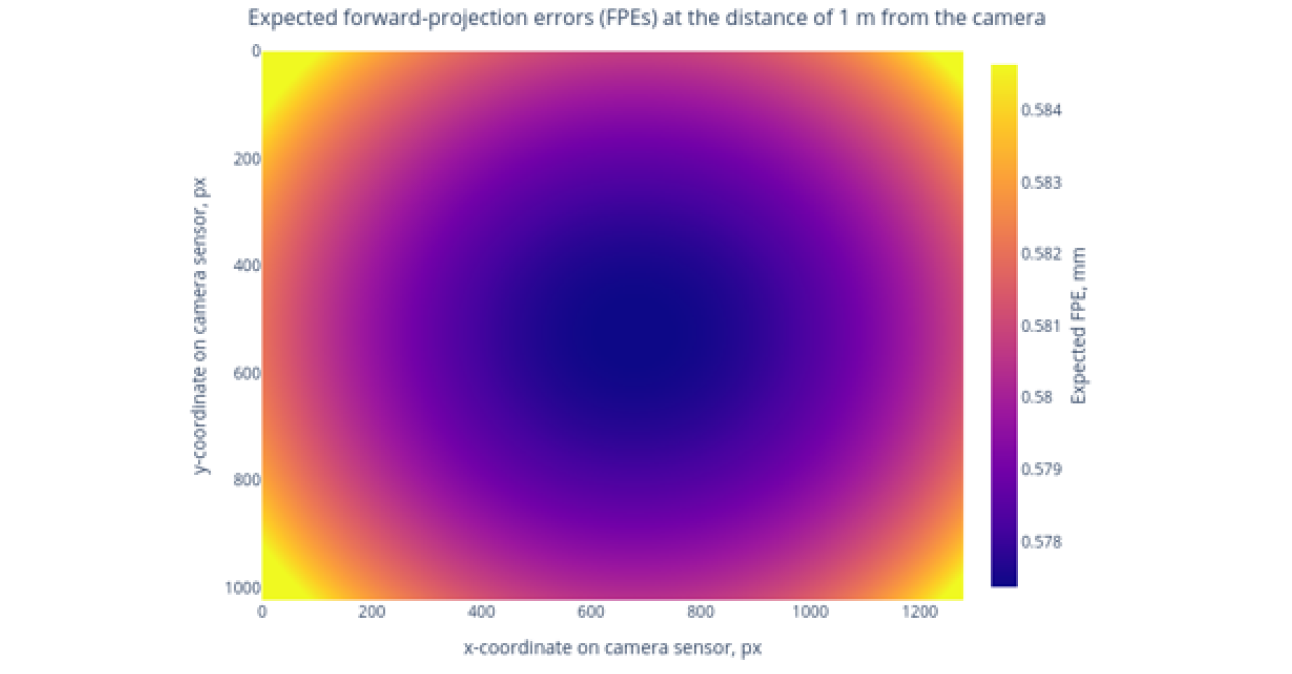

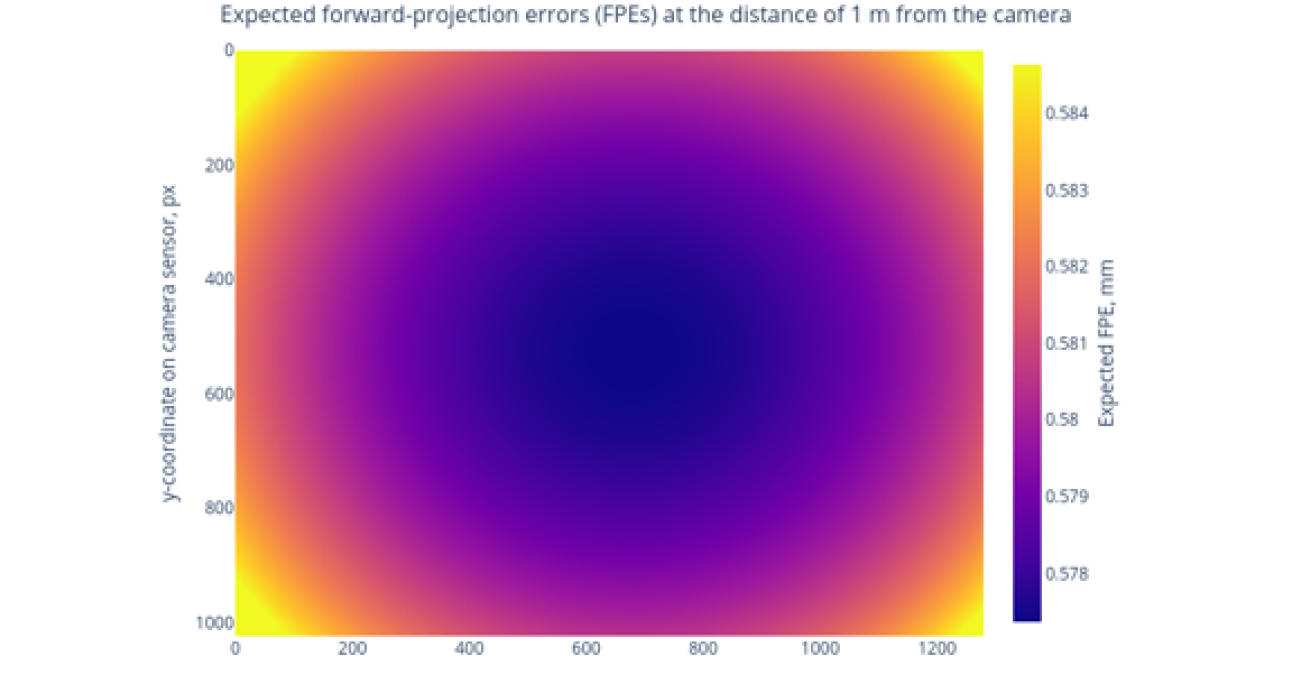

- Model repeatability: maps of expected FPE, i.e., the uncertainty of each pixel's view ray at a given distance
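The RPE and FPE metrics listed above can be illustrated with a short sketch. It rests on assumptions not stated on this page: RPE is taken as the pixel distance between an observed pixel and the projection of its 3D reference point, FPE as the distance in world units between the 3D reference point and the pixel's view ray, and the camera is a distortion-free pinhole with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`. All function names are illustrative.

```python
import math


def project_pinhole(point, fx, fy, cx, cy):
    """Project a 3D point (camera frame, z > 0) to pixel coordinates."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)


def view_ray_pinhole(pixel, fx, fy, cx, cy):
    """Unit direction of the view ray through a pixel (ray starts at the origin)."""
    u, v = pixel
    d = ((u - cx) / fx, (v - cy) / fy, 1.0)
    norm = math.sqrt(sum(c * c for c in d))
    return tuple(c / norm for c in d)


def rms(values):
    """Root mean square of a list of per-point errors."""
    return math.sqrt(sum(e * e for e in values) / len(values))


def rms_rpe(pixels, points, **model):
    """RMS reprojection error in pixels."""
    errors = [math.dist(px, project_pinhole(pt, **model))
              for px, pt in zip(pixels, points)]
    return rms(errors)


def rms_fpe(pixels, points, **model):
    """RMS forward projection error (point-to-ray distance) in world units."""
    errors = []
    for px, pt in zip(pixels, points):
        d = view_ray_pinhole(px, **model)
        t = sum(a * b for a, b in zip(pt, d))   # position of the closest point along the ray
        foot = tuple(t * c for c in d)          # closest point on the ray to pt
        errors.append(math.dist(pt, foot))
    return rms(errors)


model = dict(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
# Observed pixel is 3 px right and 4 px below the ideal projection (640, 360).
rpe = rms_rpe([(643.0, 364.0)], [(0.0, 0.0, 1000.0)], **model)
# Reference point lies 5 units off the optical axis seen by the central pixel.
fpe = rms_fpe([(640.0, 360.0)], [(3.0, 4.0, 1000.0)], **model)
```

Evaluating the same two functions on reference points that were held out from the fit (a test split or one fold of a k-fold scheme) would give the kind of reliability figure described in the list.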

For whom

Full FOV utilization:

Uniformly accurate SLAM and triangulation across the entire image of wide-angle cameras.

Precise 3D information for AI:

Well-aligned real-world and model imaging geometries facilitate faster training and more accurate inference.

Stereo:

Support for excellent stereo calibration.

Re-calibration:

Calibration can be performed in a lab or in the field, with inexpensive hardware.

Better image undistortion:

Accurate undistortion in frame corners enables the end user to capture more details in a larger field of view.

Reduced pixel registration errors:

Accurate imaging geometry enables seamless zoom across multiple cameras.

Non-uniform color calibration and compensation:

Matching color sensitivity profiles across multiple cameras.

Full FOV utilization:

Uniformly accurate triangulation across the entire image of wide-angle cameras.

Replacement of expensive industrial cameras:

Commodity cameras with an accurate calibration can deliver comparable quality for 3D applications.

Universal and flexible approach:

Our solution replaces static targets with consumer-grade monitors, supports various optics, and adapts calibration costs to each task. It delivers both photometric and geometric calibration with detailed quality metrics.

Why choose us

Use cases

- When the user zooms in on an image, the system switches between cameras. Discrepancies in geometry and color response between cameras lead to “jumps” and sudden color changes.

- Proper calibration of all cameras (both geometric and colorimetric) enables efficient correction and compensation schemes.

- Our calibration significantly reduces errors compared to the state of the art and requires no expensive equipment.

- When a robot arm grips objects based on camera input, positioning errors increase near the boundaries of the working area due to poor calibration quality. As a result, the robot may miss or damage the objects.

- Our free-form model calibration ensures uniform accuracy across the entire frame.

- Optical designers often use industrial cameras and high-end optics for demanding computer vision tasks. Costs can become significant, especially for multi-camera setups.

- Mass-produced cameras with inexpensive (e.g., plastic) lenses may rival such systems in image quality but typically exhibit greater distortion.

- By calibrating a free-form model that accurately captures these distortions, we can achieve performance equivalent to high-end systems in the final application.

FAQ

Still have questions?